Decision trees are a widely used and powerful supervised learning model that can perform either regression or classification. In this tutorial, we will focus on classification. A decision tree makes a prediction for a data point by applying a sequence of simple if/else rules to its features. It can be visualized as a tree of decisions, where each decision has several possible outcomes.

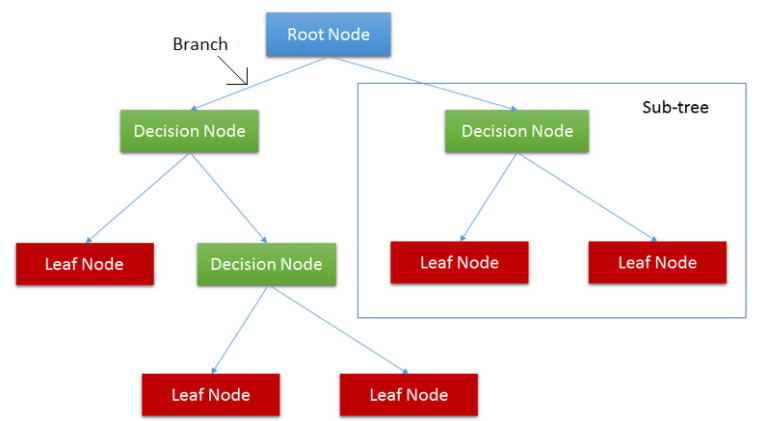
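
To make the if/else view concrete, here is a minimal sketch of a hand-written tree for iris flowers. The feature names and thresholds are illustrative assumptions: they resemble splits commonly learned on the iris dataset, but nothing is learned here. Each `if` plays the role of a decision node and each `return` is a leaf.

```python
# Illustrative, hand-written decision tree (thresholds are assumed, not learned).
# Each `if` is a decision node; each `return` is a leaf holding the predicted class.
def classify_iris(petal_length_cm: float, petal_width_cm: float) -> str:
    if petal_length_cm < 2.45:   # root decision node
        return "setosa"          # leaf
    if petal_width_cm < 1.75:    # second decision node
        return "versicolor"      # leaf
    return "virginica"           # leaf


print(classify_iris(1.4, 0.2))
print(classify_iris(4.5, 1.5))
print(classify_iris(5.8, 2.2))
```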

Starting at the root node, each decision node tests a feature, and each possible outcome of that test forms a branch. Each branch leads either to another decision node or to a leaf node, which represents the final outcome (the predicted class). To construct a decision tree, we therefore need a way to decide which feature to split on at each decision node.
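
The same structure can also be stored explicitly as data. Below is a hypothetical minimal representation for categorical features (the names `Leaf`, `DecisionNode`, and `predict` are our own, not from any library), together with a prediction routine that starts at the root and follows one branch per decision node until it reaches a leaf. The weather-style example tree is made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass
class Leaf:
    label: str  # final outcome (predicted class)


@dataclass
class DecisionNode:
    feature: str  # feature tested at this node
    # Maps each possible outcome of the test to the child it leads to (a branch).
    branches: Dict[str, "Node"] = field(default_factory=dict)


Node = Union[Leaf, DecisionNode]


def predict(node: Node, sample: Dict[str, str]) -> str:
    # Start at the root and follow the branch matching the sample's value
    # for the tested feature until a leaf is reached.
    while isinstance(node, DecisionNode):
        node = node.branches[sample[node.feature]]
    return node.label


# Hypothetical example tree.
tree = DecisionNode("outlook", {
    "sunny": DecisionNode("humidity", {"high": Leaf("no"), "normal": Leaf("yes")}),
    "overcast": Leaf("yes"),
    "rain": DecisionNode("wind", {"strong": Leaf("no"), "weak": Leaf("yes")}),
})

print(predict(tree, {"outlook": "sunny", "humidity": "normal", "wind": "weak"}))
```

This sketch raises a `KeyError` for a feature value that never appeared during training; a fuller implementation would need a fallback, such as predicting the majority class at that node.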

Several criteria can be used to choose a split in a decision tree, including information gain (which is based on entropy), the Gini index, and the gain ratio. In this tutorial, we will focus on using entropy and information gain to identify which feature to split on.
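
As a minimal sketch of how two of these impurity measures compare, the functions below compute entropy (in bits) and Gini impurity from a list of class labels. Both are 0 for a pure node and largest for an evenly mixed one: for two balanced classes, entropy is 1 bit and Gini impurity is 0.5.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Shannon entropy in bits: -sum(p * log2(p)) over class proportions p."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gini(labels: Sequence[Hashable]) -> float:
    """Gini impurity: 1 - sum(p ** 2) over class proportions p."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


# A pure node, an evenly mixed node, and a 3:1 node.
for labels in (["a"] * 4, ["a", "a", "b", "b"], ["a", "a", "a", "b"]):
    print(labels, round(entropy(labels), 3), round(gini(labels), 3))
```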

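Entropy is a measure of randomness or uncertainty; here it quantifies how mixed the class labels in a set of samples are. Information gain measures how much splitting on an attribute reduces that entropy, and therefore how useful the attribute is for classification. By calculating the entropy of the label distribution and subtracting the weighted average entropy of the subsets produced by splitting on each attribute, we can select the attribute with the highest information gain as the root node. The same procedure is then repeated on each resulting subset.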
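
The calculation can be sketched in a few lines. The information gain of an attribute is the entropy of the labels minus the size-weighted average entropy of the subsets obtained by grouping the samples on that attribute's values. The six-row dataset and its `outlook`/`windy` attributes are hypothetical, made up for illustration.

```python
import math
from collections import Counter
from typing import Dict, List


def entropy(labels: List[str]) -> float:
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows: List[Dict[str, str]], labels: List[str], attribute: str) -> float:
    """H(labels) minus the weighted entropy of each subset after splitting on `attribute`."""
    n = len(labels)
    subsets: Dict[str, List[str]] = {}
    for row, label in zip(rows, labels):
        subsets.setdefault(row[attribute], []).append(label)
    weighted = sum(len(s) / n * entropy(s) for s in subsets.values())
    return entropy(labels) - weighted


# Hypothetical toy data: three "yes" and three "no" labels.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "overcast", "windy": "no"},
    {"outlook": "rain", "windy": "no"},
    {"outlook": "rain", "windy": "yes"},
    {"outlook": "overcast", "windy": "yes"},
]
labels = ["no", "no", "yes", "yes", "no", "yes"]

gains = {a: information_gain(rows, labels, a) for a in ("outlook", "windy")}
best = max(gains, key=gains.get)  # attribute to use as the root node
print(gains, best)
```

Here `outlook` separates the labels much more cleanly than `windy` (two of its three subsets are pure), so it has the higher information gain and would be chosen as the root.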

To prevent overfitting, we need to establish stopping criteria for the growth of the decision tree. Commonly used stopping criteria include a minimum information gain threshold, a maximum tree depth, and a minimum number of samples in a subtree.
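
Putting the pieces together, the following is a minimal ID3-style sketch that grows a tree recursively, top-down, for categorical features and applies the three stopping criteria above. The parameter names `max_depth`, `min_samples`, and `min_gain` and their default values are our own illustrative choices. When any criterion fires, the node becomes a leaf that predicts the majority label of its samples.

```python
import math
from collections import Counter
from typing import Any, Dict, List


def entropy(labels: List[str]) -> float:
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def partition(rows, labels, attr) -> Dict[str, tuple]:
    """Group (rows, labels) by the value each row takes for `attr`."""
    groups: Dict[str, tuple] = {}
    for row, label in zip(rows, labels):
        g_rows, g_labels = groups.setdefault(row[attr], ([], []))
        g_rows.append(row)
        g_labels.append(label)
    return groups


def info_gain(rows, labels, attr) -> float:
    n = len(labels)
    groups = partition(rows, labels, attr)
    return entropy(labels) - sum(len(gl) / n * entropy(gl) for _, gl in groups.values())


def build_tree(rows, labels, attrs, depth=0, max_depth=3, min_samples=2, min_gain=0.01) -> Any:
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping criteria: pure node, too few samples, depth limit, or no attributes left.
    if len(set(labels)) == 1 or len(labels) < min_samples or depth >= max_depth or not attrs:
        return majority
    gains = {a: info_gain(rows, labels, a) for a in attrs}
    best = max(gains, key=gains.get)
    if gains[best] < min_gain:  # minimum information gain threshold
        return majority
    remaining = [a for a in attrs if a != best]
    return {
        "feature": best,
        "branches": {
            value: build_tree(sub_rows, sub_labels, remaining, depth + 1,
                              max_depth, min_samples, min_gain)
            for value, (sub_rows, sub_labels) in partition(rows, labels, best).items()
        },
    }


# Hypothetical toy data.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "overcast", "windy": "no"},
    {"outlook": "rain", "windy": "no"},
    {"outlook": "rain", "windy": "yes"},
    {"outlook": "overcast", "windy": "yes"},
]
labels = ["no", "no", "yes", "yes", "no", "yes"]
tree = build_tree(rows, labels, ["outlook", "windy"])
print(tree)
```

Lowering `max_depth` to 1 in this example stops growth after the root split, so the mixed `rain` branch becomes a majority-vote leaf instead of being split further on `windy`.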

Finally, to implement a decision tree using scikit-learn, we can use the `DecisionTreeClassifier` class from the `sklearn.tree` module. We can load the iris dataset, split it into training and test sets, fit the classifier on the training data, predict the labels for the test data, and calculate the accuracy of the predictions.
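
A minimal sketch of these steps is shown below. The hyperparameter values (`test_size`, `max_depth`, `min_samples_split`, `min_impurity_decrease`, `random_state`) are illustrative assumptions, not values from this tutorial's repository. Setting `criterion="entropy"` makes scikit-learn choose splits by information gain instead of its default Gini index, and the other three arguments roughly correspond to the stopping criteria discussed above (scikit-learn also offers `min_samples_leaf`).

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load the iris dataset and hold out 30% of it for testing.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

clf = DecisionTreeClassifier(
    criterion="entropy",         # split on information gain rather than Gini
    max_depth=3,                 # stopping criterion: maximum tree depth
    min_samples_split=5,         # stopping criterion: minimum samples to split a node
    min_impurity_decrease=0.01,  # stopping criterion: minimum weighted impurity decrease
    random_state=42,
)
clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.3f}")
```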

In conclusion, decision trees are powerful classifiers that are constructed recursively in a top-down manner. They consist of a root node, decision nodes, branches, and leaf nodes. Various stopping criteria can be used to prevent overfitting. You can find the code for this tutorial in this GitHub repo.