Recent advances in artificial intelligence, particularly in deep learning, have been remarkable, which is encouraging for anyone interested in the field. Measuring progress towards human-level artificial intelligence, however, is much harder. There are several reasons for this; one of the main barriers is the lack of consensus on the essential criteria for intelligent machines, which makes it difficult to compare different agents. Although some researchers focus on this topic, the AI community would benefit from paying more attention to AI evaluation.

Methods for evaluating AI are crucial tools for assessing the progress of agents that have already been built. The comparison and evaluation of different roadmaps and approaches for building these agents, however, has not been explored extensively. Such comparison is even more challenging because forward-looking plans tend to be vague and offer few formal definitions. Still, we believe that identifying promising areas of research and avoiding dead ends requires comparing existing roadmaps in a meaningful way. This in turn requires a framework that defines how to extract important and comparable information from the documents that outline these roadmaps. Without a unified framework, roadmaps may differ not only in their targets but also in their approaches, which makes them impossible to compare and contrast.

In this post, we give some insight into how we, at GoodAI, have started to address the internal problem of comparing the progress of our three architecture teams, and how this could be scaled to comparisons across the wider AI community. Although this is still a work in progress, we believe it is worth sharing these initial thoughts with the community to start a discussion on this important topic.

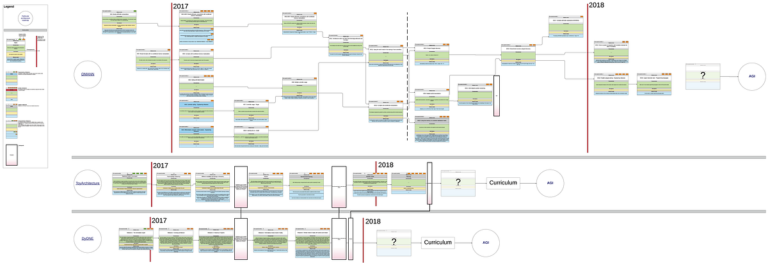

In the first part of this article, we present a comparison of three GoodAI architecture development roadmaps and discuss a technique for comparing them. The main objective is to estimate the potential and completeness of each architecture’s plans in order to direct our efforts towards the most promising one. To manage the addition of roadmaps from other teams, we have developed a meta-roadmap that outlines the general plan for the development of human-level AI. This meta-roadmap consists of 10 steps that need to be achieved in order to reach the ultimate goal. We hope that most of the diverse plans align with one or more problems identified in the meta-roadmap.

Next, we attempted to compare our approaches with that of Mikolov et al. by assigning the current documents and open tasks to problems in the meta-roadmap. This proved to be useful as it revealed which aspects could be compared and highlighted the need for different comparison techniques for each problem.
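The assignment step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not our actual tooling: the function names, the `(approach, item, problem)` tuple layout, and the problem labels used in the usage note are hypothetical placeholders, not the real meta-roadmap steps.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical record layout: (approach, document_or_task, meta_roadmap_problem)
Assignment = Tuple[str, str, str]


def group_by_problem(
    assignments: Iterable[Assignment],
) -> Dict[str, Dict[str, List[str]]]:
    """Group documents and open tasks as {problem: {approach: [items]}}."""
    grouped: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: defaultdict(list))
    for approach, item, problem in assignments:
        grouped[problem][approach].append(item)
    # Convert the nested defaultdicts to plain dicts for predictable lookups.
    return {problem: dict(by_approach) for problem, by_approach in grouped.items()}


def comparable_problems(
    grouped: Dict[str, Dict[str, List[str]]], min_approaches: int = 2
) -> Set[str]:
    """Problems addressed by at least `min_approaches` different approaches."""
    return {
        problem
        for problem, by_approach in grouped.items()
        if len(by_approach) >= min_approaches
    }
```

Given rows such as `("team_a", "design doc", "architecture")` and `("external", "paper", "curriculum")`, `comparable_problems` returns only those problems that at least two approaches address, which is where a direct comparison is possible at all.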

Our three teams at GoodAI have been working on their architectures for a few months. Now we need a method to measure the potential of these architectures in order to allocate resources more efficiently. It is still challenging to determine which approach is the most promising based on the current state, so we asked the teams to create roadmaps for future development. Based on the responses, we have iteratively unified the requirements for these plans. After multiple discussions, we have established a structure that includes milestones, time estimates, characteristics of work or new features, and tests for these features. We have also included checkpoints to enable comparisons between architectures during the development process. This provides us with basic tools to monitor progress, assess the achievement of milestones, and compare time estimates. We are still working on a unified set of features required for an architecture.
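To make the structure concrete, here is a minimal sketch of how such a roadmap could be represented. The class names, field names, and the choice of weeks as the time unit are assumptions made for illustration; they are not the format our teams actually use.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Milestone:
    name: str
    estimate_weeks: float             # time estimate (unit is an assumption)
    features: List[str]               # characteristics of work or new features
    tests: List[str]                  # tests for those features
    checkpoint: Optional[str] = None  # shared label for cross-architecture comparison


@dataclass
class Roadmap:
    architecture: str
    milestones: List[Milestone] = field(default_factory=list)

    def weeks_until(self, checkpoint: str) -> Optional[float]:
        """Cumulative estimate up to and including the milestone at `checkpoint`."""
        total = 0.0
        for milestone in self.milestones:
            total += milestone.estimate_weeks
            if milestone.checkpoint == checkpoint:
                return total
        return None  # this roadmap never reaches the checkpoint


def shared_checkpoints(roadmaps: List[Roadmap]) -> Set[str]:
    """Checkpoints that every given roadmap defines."""
    per_roadmap = [
        {m.checkpoint for m in r.milestones if m.checkpoint is not None}
        for r in roadmaps
    ]
    return set.intersection(*per_roadmap) if per_roadmap else set()
```

With roadmaps in this form, `shared_checkpoints` shows where architectures can be compared directly, and `weeks_until` lets us set their time estimates for the same checkpoint side by side. A roadmap without any checkpoints makes the shared set empty, which flags it as not yet comparable.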

The comparison of the plans revealed that while the teams have rough plans for more than a year ahead, the architectures are not yet ready for any curriculum. Two architectures follow a connectionist approach, which makes them easier to compare with each other. The third architecture, OMANN, instead manipulates symbols, which allows it to perform tasks that are difficult for the other two. For this reason, no checkpoints for OMANN have been defined yet. We consider the lack of common tests a significant issue with the plan and are exploring changes that would make the architecture more comparable to the others, even if this causes some delays in development.

We attempted to include another architecture in the comparison but were unable to find a detailed document describing future work, except for one paper by Weston et al. However, upon further analysis, we realized that the paper focused on a slightly different problem than the development of an architecture.

To address the problem of developing an intelligent agent, we consider the necessary steps and make a few assumptions. We acknowledge that these assumptions are somewhat vague, but we aim to make them acceptable to other AI researchers. We propose a meta-roadmap that outlines the required steps and their order. This includes developing a software architecture as a part of an agent in a world, defining tasks or rewards for the agent, adapting to an unknown/changing environment, and ultimately passing a well-defined final test to demonstrate intelligence. Prior to the final test, there needs to be a learning phase, which includes a curriculum. Using these assumptions, we present Figure 2, which illustrates the meta-roadmap of necessary steps.